In this post I’ll describe how to migrate large data sets from an Azure SQL database to another. It’s a manual process, probably best suited for one-offs as it could take several hours depending on your server configuration and table size. I’m no data scientist, most of this knowledge was gathered from stackoverflow, so please handle with care.

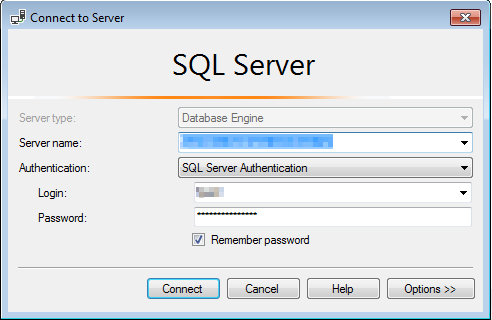

To communicate with Azure use Microsoft SQL Server Management Studio (SSMS).

-

Connect to the db and enter your Azure Database credentials

-

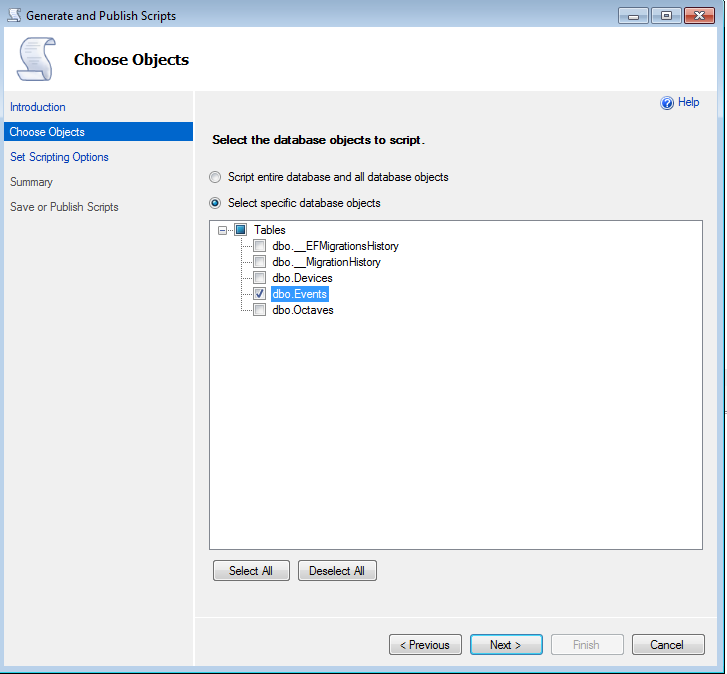

Right click on the database > “Generate scripts…”

-

Then “Select specific database objects” (here I’m just selecting a table each time to have more control over the process, but it should work also with more tables )

-

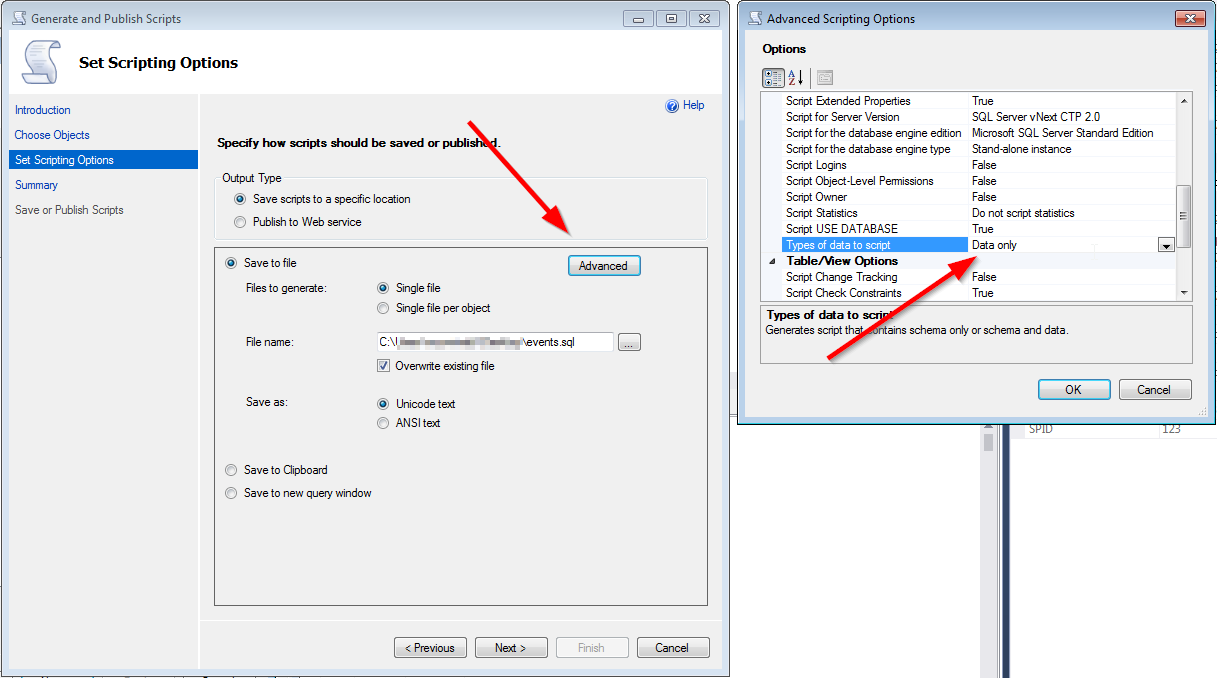

Next, click on “Advanced” and set “Types of data to script” to “Data only” (in this step, if the table is not very large, you could also just output it to a new query window and run the query to import it)

-

Finish the wizard and open the exported sql script

-

Remove the first two lines (the

USEandGOstatements weren’t needed in my case) and rename the table the the new db’s table name -

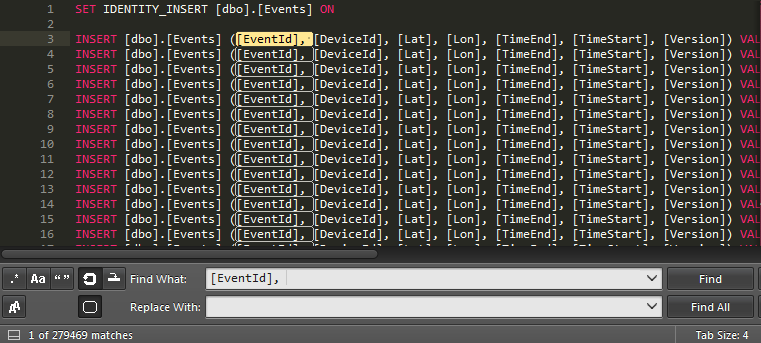

Unless the data structure matches the new table just fine you might need to tweak the data a bit more. In my case I wanted to remove the auto-incremented ` EventId` to avoid conflicts with existing rows, I used SublimeText to remove that column:

-

first replace

[EventId],with an empty string -

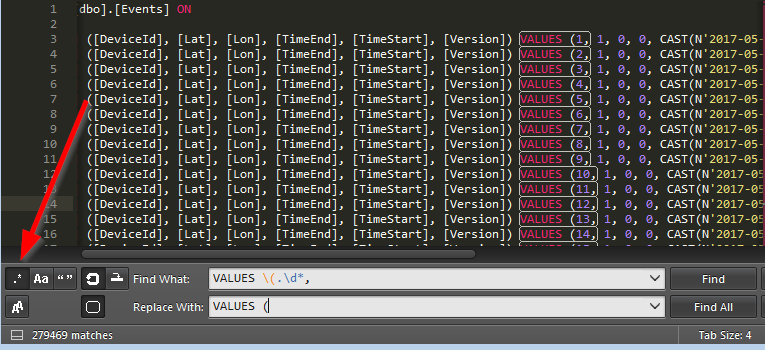

then replace the regex

VALUES \(.\d*,withVALUES ( -

if you remove this column, then also turn off

IDENTITY_INSERTby removing the first and last linesSET IDENTITY_INSERT [dbo].[Events] ONandSET IDENTITY_INSERT [dbo].[Events] OFFotherwise you’ll receive an error message

-

-

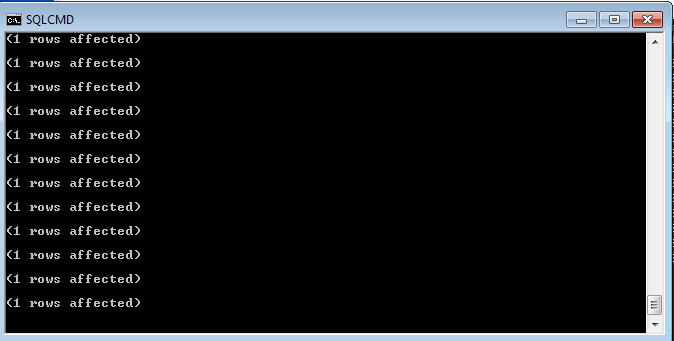

Open cmd and run the command below to import the table into the new db, replace the required <> fields with the new db connection info:

SQLCMD -S <SERVER-NAME> -d <DB-NAME> -U <USERNAME> -P "<PASSWORD>" -i <PATH-TO-YOUR-SQL-FILE>.sql -

If everything goes well you’ll see the message below until it’s complete